I’m drawing closer to the end of the alphabet. While I have got choices for ‘V’ and ‘W’ set, I’ll admit that I’m still looking for something that inspires me in the last couple letters. Such inspiration might come from anywhere. HowardAt58, of that WordPress blog, gave me the notion for today’s entry.

Uncountable.

What are we doing when we count things?

Maybe nothing. We might be counting just to be doing something. Or we might be counting because we want to do nothing. Counting can be a good way into a restful state. Fair enough. Just because we do something doesn’t mean we care about the result.

Suppose we do care about the result of our counting. Then what is it we do when we count? The mechanism is straightforward enough. We pick out things and say, or imagine saying, “one, two, three, four,” and so on. Or we at least imagine the numbers along with the things being numbered. When we run out of things to count, we take whatever the last number was. That’s how many of the things there were. Why are there eight light bulbs in the chandelier fixture above the dining room table? Because there are not nine.

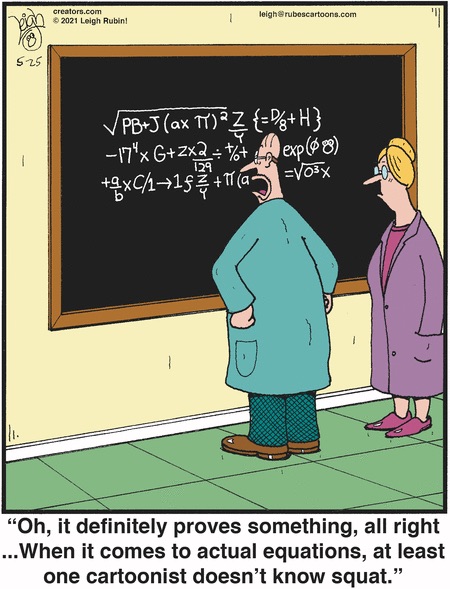

That’s how lay people count anyway. Mathematicians would naturally have a more sophisticated view of the business. A much more powerful counting scheme. Concepts in counting that go far beyond what you might work out in first grade.

Yeah, so that’s what most of us would figure. Things don’t get much more sophisticated than that, though. This is probably because the idea of counting is tied to the theory of sets. And the theory of sets grew, in part, to come up with a logically solid base for arithmetic. So many of the key ideas of set theory are so straightforward they hardly seem to need explaining.

We build the idea of “countable” off of the nice, familiar numbers 1, 2, 3, and so on. That set’s called the counting numbers. They’re the numbers that everybody seems to recognize as numbers. Not just people. Even animals seem to understand at least the first couple of counting numbers. Sometimes these are called the natural numbers.

Take a set of things we want to study. We’re interested in whether we can match the things in that set one-to-one with the things in the counting numbers. We don’t have to use all the counting numbers. But we can’t use the same counting number twice. If we’ve matched one chandelier light bulb with the number ‘4’, we mustn’t match a different bulb with the same number. Similarly, if we’ve got one bulb matched to the number ‘4’, we mustn’t also match that same bulb to ‘7’ at the same time.

If we can do this, then our set’s countable. If we really wanted, we could pick the counting numbers in order, starting from 1, and match up all the things with counting numbers. If we run out of things, then we have a finitely large set. The last number we used in the matchup is the size, or in the jargon, the cardinality, of our set. We might not care about the cardinality, just whether the set is finite. Then we can pick counting numbers as we like, in no particular order. Just use whatever’s convenient.
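Here is a minimal Python sketch of that in-order procedure, assuming a finite collection of hashable things. The function name `count_by_matching` and the bulb labels are hypothetical, made up for illustration. It hands out counting numbers in order from 1, never gives one thing two numbers, never uses one number twice, and reports the last number used.

```python
def count_by_matching(things):
    """Match each thing to a counting number, in order, starting from 1.

    Returns the matchup (a dict from thing to number) and the last number
    used, which is the cardinality of the finite collection. A thing listed
    twice is treated as one thing, so it only ever gets one number.
    """
    matchup = {}
    number = 0
    for thing in things:
        if thing in matchup:
            continue  # this thing already has its number; never give it a second
        number += 1   # next unused counting number
        matchup[thing] = number
    # No number is used twice, because `number` only ever goes up.
    assert len(set(matchup.values())) == len(matchup)
    return matchup, number


# Hypothetical labels for the eight chandelier bulbs.
bulbs = ["bulb A", "bulb B", "bulb C", "bulb D",
         "bulb E", "bulb F", "bulb G", "bulb H"]
matchup, size = count_by_matching(bulbs)
print(size)  # 8
```

An empty collection comes back with size 0, since no counting number ever gets used.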

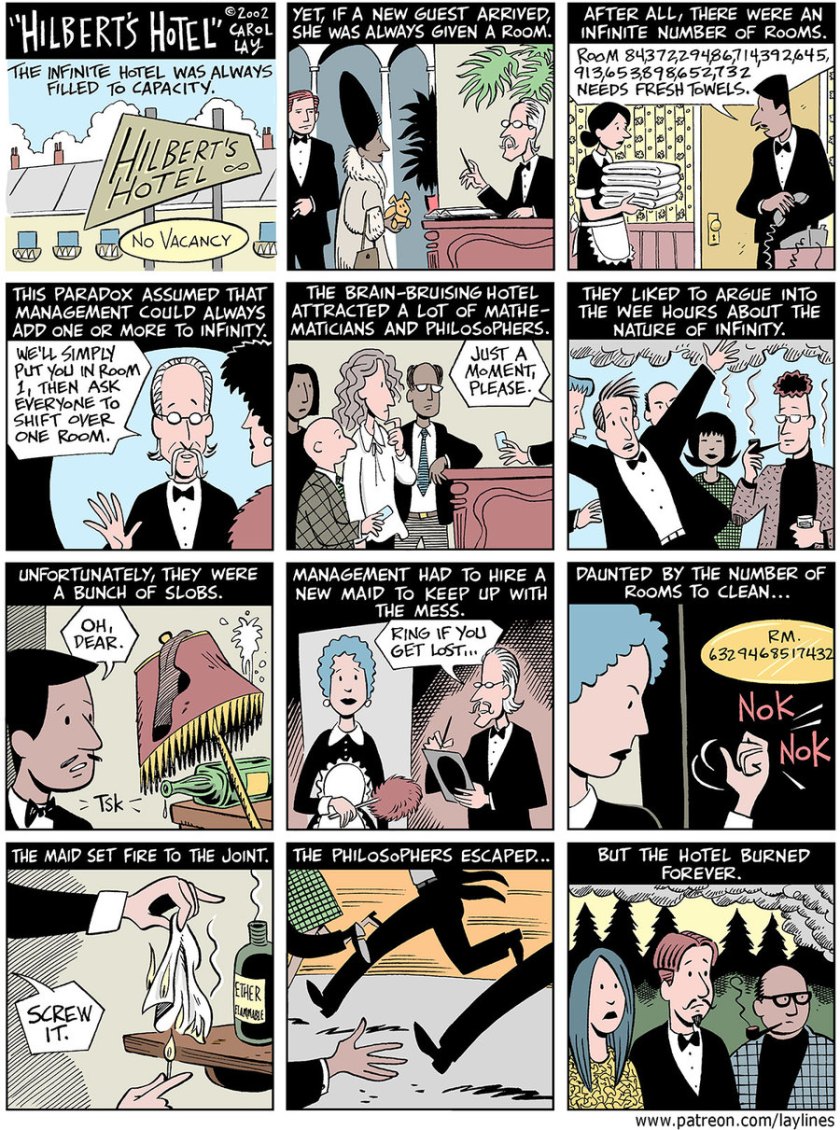

But what if we don’t run out of things? And it’s possible we won’t. Suppose our set is the negative whole numbers: -1, -2, -3, -4, -5, and so on. We can match each of those to a counting number in many ways. We always can. But there’s an easy way. Match -1 to 1, match -2 to 2, match -3 to 3, and so on. Why work harder than that? We aren’t going to run out of negative whole numbers. And we aren’t going to find any we can’t match with some counting number. And we aren’t going to have to match two different negative numbers to the same counting number. So what we have here is an infinitely large, yet still countable, set.

So a set of things can be countable and finite. It can be countable and infinite. What else is there to be?

There must be something. It’d be peculiar to have a classification that everything was in, after all. At least it would be peculiar except for people studying what it means to exist or to not exist. And most of those people are in the philosophy department, where we’re scared of visiting. So we must mean there’s some such thing as an uncountable set.

The idea means just what you’d guess if you didn’t know enough mathematics to be tricky. Something is uncountable if it can’t be counted. It can’t be counted if there’s no way to match it up, one thing-to-one thing, with the counting numbers. We have to somehow run out of counting numbers.

It’s not obvious that we can do that. Some promising approaches don’t work. For example, the set of all the integers — 1, 2, 3, 4, 5, and all that, and 0, and the negative numbers -1, -2, -3, -4, -5, and so on — is still countable. Match the counting number 1 to 0. Match the counting number 2 to 1. Match the counting number 3 to -1. Match 4 to 2. Match 5 to -2. Match 6 to 3. Match 7 to -3. And so on.
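That zigzag matchup can be written as a formula. In this minimal Python sketch the function names are hypothetical. Having an inverse, a function that recovers the counting number from any integer, is one way to see that no integer is missed and no counting number is used twice.

```python
def integer_for(n):
    """Return the integer matched to counting number n (n = 1, 2, 3, ...).

    Follows the order in the text: 1 -> 0, 2 -> 1, 3 -> -1, 4 -> 2, 5 -> -2, ...
    """
    if n < 1:
        raise ValueError("counting numbers start at 1")
    if n % 2 == 0:
        return n // 2          # even counting numbers go to the positive integers
    return -((n - 1) // 2)     # odd counting numbers go to 0 and the negatives


def counting_number_for(k):
    """Inverse matchup: the counting number matched to the integer k."""
    return 2 * k if k > 0 else 1 - 2 * k


print([integer_for(n) for n in range(1, 8)])  # [0, 1, -1, 2, -2, 3, -3]
```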

Even ordered pairs of counting numbers don’t do it. We can match the counting number 1 to the pair (1, 1). Match the counting number 2 to the pair (2, 1). Match the counting number 3 to (1, 2). Match 4 to (3, 1). Match 5 to (2, 2). Match 6 to (1, 3). Match 7 to (4, 1). Match 8 to (3, 2). And so on. The trick is to go through the pairs in groups, by the sum of the two numbers: first the pair that adds to 2, then the pairs that add to 3, then those that add to 4. Each group is finite, so every pair gets reached eventually. We can get similarly staggering results with ordered triplets, quadruplets, and more. Ordered pairs of integers, positive and negative? Longer to do, yes, but just as doable.
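A minimal Python sketch of that ordering, with hypothetical function names: a generator that lists the pairs in the order given, and a closed-form formula for where any pair lands. This is one variant of the diagonal ordering often used for the Cantor pairing function; other conventions run the diagonals the other way.

```python
from itertools import islice


def pairs_in_order():
    """Yield ordered pairs of counting numbers in the order used in the text.

    Pairs are grouped by the sum a + b. Within each group, the first entry
    counts down, so the order is (1,1), (2,1), (1,2), (3,1), (2,2), (1,3), ...
    """
    total = 2
    while True:
        for a in range(total - 1, 0, -1):
            yield (a, total - a)
        total += 1


def counting_number_for_pair(a, b):
    """Closed-form position of the pair (a, b) in that order."""
    d = a + b - 1                # which group (diagonal) the pair is in, from 1
    return (d - 1) * d // 2 + b  # pairs in all earlier groups, plus place in this one


print(list(islice(pairs_in_order(), 8)))
# [(1, 1), (2, 1), (1, 2), (3, 1), (2, 2), (1, 3), (4, 1), (3, 2)]
```

The formula works because group d holds exactly d pairs, so the groups before it hold 1 + 2 + ... + (d - 1) pairs in all.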

So are there any uncountable things?

Sure. Wouldn’t be here if there weren’t. For example: think about the set that’s all the ways to pick things from a set. I sense your confusion. Let me give you an example. Suppose we have a set of three things. They’re the numbers 1, 2, and 3. We can make a bunch of sets out of things from this set. We can make the set that just has ‘1’ in it. We can make the set that just has ‘2’ in it. Or the set that just has ‘3’ in it. We can also make the set that has just ‘1’ and ‘2’ in it. Or the set that just has ‘2’ and ‘3’ in it. Or the set that just has ‘3’ and ‘1’ in it. Or the set that has all of ‘1’, ‘2’, and ‘3’ in it. And we can make the set that hasn’t got any of these in it. (Yes, that does too count as a set.)

So from a set of three things, we were able to make a collection of eight sets. If we had a set of four things, we’d be able to make a collection of sixteen sets. With five things to start from, we’d be able to make a collection of thirty-two sets. This collection of sets we call the “power set” of our original set, and if there’s one thing we can say about it, it’s that it’s bigger than the set we start from.
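Those counts can be checked directly. This minimal Python sketch builds every subset using the standard library’s `itertools.combinations`; the function name `power_set` is just a label chosen for illustration.

```python
from itertools import combinations


def power_set(things):
    """Every set that can be made from `things`, including the empty set."""
    items = list(things)
    return [set(combo)
            for size in range(len(items) + 1)
            for combo in combinations(items, size)]


for n in (3, 4, 5):
    print(n, len(power_set(range(1, n + 1))))  # prints 3 8, then 4 16, then 5 32
```

The count doubles with each new thing because every set made without it can be made again with it added. So n things give 2ⁿ sets.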

The power set for a finite set, well, that’ll be much bigger. But it’ll still be finite. Still be countable. What about the power set for an infinitely large set?

And the power set of the counting numbers, the collection of all the ways you can make a set of counting numbers, is really big. Is it uncountably big?

Let’s step back. Remember when I said mathematicians don’t get “much more” sophisticated than matching up things to the counting numbers? Here’s a little bit of that sophistication. We don’t have to match stuff up to counting numbers at all. We can match the things in one set to the things in another set. If it’s possible to match them up one-to-one, with nothing missing in either set, then the two sets have to be the same size. The same cardinality, in the jargon.

So. The set of the numbers 1, 2, and 3 has to have a smaller cardinality than its power set. Want to prove it? Do this exactly the way you imagine. You run out of things in the original set before you run out of things in the power set, so there’s no making a one-to-one matchup between the two.
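For the three-number set, the claim is small enough to check by brute force. This Python sketch, with hypothetical helper names, tries all 8 × 8 × 8 = 512 ways of pairing each of 1, 2, and 3 with a subset, and asks whether any of them reaches all eight subsets.

```python
from itertools import combinations, product


def subsets(things):
    """Every subset of `things`, as frozensets so they can sit inside a set."""
    items = list(things)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]


def some_matchup_uses_everything(small, big):
    """Try every way to pair each thing in `small` with a thing in `big`.

    Return True if any pairing reaches every thing in `big`.
    """
    small, big = list(small), set(big)
    return any(set(choice) == big
               for choice in product(big, repeat=len(small)))


S = {1, 2, 3}
print(some_matchup_uses_everything(S, subsets(S)))  # False
print(some_matchup_uses_everything(S, S))           # True
```

The second line is a sanity check: matching the set against itself does succeed, for example by pairing each number with itself.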

With the infinitely large yet countable set of the counting numbers … well, the same result holds. It’s harder to prove. You have to show that there’s no possible way to match the infinitely many things in the counting numbers to the infinitely many things in the power set of the counting numbers. (The easiest way to do this is by contradiction. Imagine that you have made such a matchup, pairing everything in your power set to everything in the counting numbers. Then you go through your matchup and put together a collection that isn’t accounted for: every counting number that isn’t in the set it was matched to. That collection is a set of counting numbers, so it belongs in the power set. But it can’t be the set matched to any counting number, say 7, because the two disagree about whether 7 belongs. Whoops! So you must not have matched everything up in the first place. Why not? Because you can’t.)
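The collection that isn’t accounted for can be built mechanically from any proposed matchup. A program can’t hold an infinite set outright, so this minimal Python sketch assumes a set of counting numbers is represented by its membership test, a function answering True or False for each number. The sample matchups are hypothetical, there only to exercise the construction, and the check over the first ten thousand numbers is a spot check of something that holds for every n by construction.

```python
from typing import Callable

# A set of counting numbers, represented by its membership test:
# the function answers True for the numbers in the set, False for the rest.
CountingSet = Callable[[int], bool]
# A proposed matchup: counting number n -> the set of counting numbers paired with n.
Matchup = Callable[[int], CountingSet]


def left_out(matchup: Matchup) -> CountingSet:
    """The set {n : n is not in matchup(n)}, built from the matchup itself."""
    return lambda n: not matchup(n)(n)


def disagrees_at(matchup: Matchup, n: int) -> bool:
    """True if the left-out set and the set matched to n disagree about n."""
    return left_out(matchup)(n) != matchup(n)(n)


# Hypothetical sample matchups.
def multiples(n: int) -> CountingSet:
    return lambda k: k % n == 0        # n -> {n, 2n, 3n, ...}


def below(n: int) -> CountingSet:
    return lambda k: 1 <= k < n        # n -> {1, 2, ..., n - 1}


def always_odds(n: int) -> CountingSet:
    return lambda k: k % 2 == 1        # every n -> the odd numbers


for matchup in (multiples, below, always_odds):
    assert all(disagrees_at(matchup, n) for n in range(1, 10_000))
```

With `multiples`, every n sits in its own set, so the left-out set is empty. With `below`, no n sits in its own set, so the left-out set is all the counting numbers. With `always_odds`, it is the even numbers. In each case the matchup never reaches it.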

But the result holds. The power set of the counting numbers is some other set. It’s infinitely large, yes. And it’s so infinitely large that it’s somehow bigger than the counting numbers. It is uncountable.

There’s more than one uncountably large set. Of course there are. We even know of some of them. For example, there’s the set of real numbers. Three-quarters of my readers have been sitting anxiously for the past eight paragraphs wondering if I’d ever get to them. There’s good reason for that. Everybody feels like they know what the real numbers are. And the proof that the real numbers are a larger set than the counting numbers is easy to understand. An eight-year-old could master it. You can find that proof well-explained within the first ten posts of pretty much every mathematics blog other than this one. (I was saving the subject. Then I finally decided I couldn’t explain it any better than everyone else has done.)

Is there a set bigger than the counting numbers but smaller than the real numbers?

Sure, there is.

No, there’s not.

Whichever you like. This is one of the many surprising mathematical results of the surprising 20th century. The real numbers turn out to be exactly as big as the power set of the counting numbers; that much can be proven. But starting from the standard axioms of set theory, it’s undecidable whether any set sits strictly between the counting numbers and the real numbers in size. You can assume that no such set exists. This is known as the Continuum Hypothesis. And you can do fine mathematical work with it. You can assume that such a set does exist. This is known as the … uh … Rejecting the Continuum Hypothesis. And you can do fine mathematical work with that. What’s right depends on what work you want to do. Either is consistent with the starting axioms. You are free to choose either, or if you like, neither.

My understanding is that most set theorists find it more productive to suppose that the Continuum Hypothesis fails. I don’t know why this is. I know enough set theory to lead you to this point, but not past it.

But that the question can exist tells you something fascinating. You can take the power set of the power set of the counting numbers. And this gives you another, even vaster, uncountably large set. As enormous as the collection of all the ways to pick things out of the counting numbers is, this power set of the power set is even vaster.

We’re not done. There’s the power set of the power set of the power set of the counting numbers. And the power set of that. Much as geology teaches us to see Deep Time, and astronomy Deep Space, so power sets teach us to see Deep … something. Deep Infinity, perhaps.
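In symbols, writing ℕ for the counting numbers, 𝒫(A) for the power set of a set A, and |A| for its cardinality, the tower is a chain of strict inequalities. Each step is the power-set result (often called Cantor’s theorem) applied once more:

$$
|\mathbb{N}| < |\mathcal{P}(\mathbb{N})| < |\mathcal{P}(\mathcal{P}(\mathbb{N}))| < |\mathcal{P}(\mathcal{P}(\mathcal{P}(\mathbb{N})))| < \cdots
$$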

![A numeral 2 is on the page. Alice, offscreen, asks: 'Petey, what letter is that?' Petey: 'It's the *number* two.' Alice: 'Is it happy or sad?' Petey: 'I don't know.' Alice: 'It's a girl, right? And it's green?' The numeral is now green, has long hair, and a smile. Petey: 'Well --- ' Alice: 'Does she wear a hat? Like a beret?' 'A hat?' The numeral now has a beret. Alice: 'Does she have a pet? I'll give her a few hamsters.' Petey: 'A pet?' There are a couple of hamsters around the 2 now. Alice: 'She likes *this* number.' Petey: 'Why --- ' The other number is a 5. Alice: 'But not *this* number. [ It's a 9. ] Because he's not looking at her.' The 2 is frowning at the 9. Petey: 'How do you --- ' Alice: 'What kind of music does she listen to?' Petey: 'ALICE! Stop asking me all this stuff! *I'm* no good at math!' Alice, upset: 'So it's up to *me* to be the family genius ... '](https://nebusresearch.files.wordpress.com/2022/07/cul-de-sac_richard-thompson_2022-07july-03.jpeg?w=840&h=410)

![[ You arrive at the Infinite Hotel ... but the concierge says *all* infinity of their rooms are booked. Luckily, you know MATH. 'Infinity = Infinity + 1'. You explain that if you just ask each guest to move one room over ... ] There's a large man in diapers with a sock puppet on his hand in the first door. [ Yeah ... math's not solving that. ] You drive away.](https://nebusresearch.files.wordpress.com/2018/05/john-mcnamee_pie-comic_4-may-2018.gif?w=840&h=1145)