I’m at a point in my Why Stuff Can Orbit essays where I need to talk about derivatives. If I don’t then I’m going to be stuck with qualitative talk that’s too vague to pin anything down. But it’s a big subject. It’s about two-fifths of a Freshman Calculus course and one of the two main legs of calculus. So I want to pull out a quick essay explaining the important stuff.

Derivatives, sometimes loosely called differentials, are about how things change. By “things” I mean “functions”. And particularly I mean functions whose domain is in the real numbers and whose range is in the real numbers. That’s the starting point. We can define derivatives for functions with domains or ranges that are vectors of real numbers, or that are complex numbers, or are even more exotic kinds of numbers. But those ideas are all built on the derivatives for real-valued functions. So if you get really good at them, everything else is easy.

Derivatives are the part of calculus where we study how functions change. They’re blessed with some easy-to-visualize interpretations. Suppose you have a function that describes where something is at a given time. Then its derivative with respect to time is the thing’s velocity. You can build a pretty good intuitive feeling for derivatives that way. I won’t stop you from it.

A function’s derivative is itself a function. The derivative doesn’t have to be constant, although it’s possible. Sometimes the derivative is positive. This means the original function increases as whatever its variable is increases. Sometimes the derivative is negative. This means the original function decreases as its variable increases. If the derivative is a big number this means it’s increasing or decreasing fast. If the derivative is a small number it’s increasing or decreasing slowly. If the derivative is zero then the function isn’t increasing or decreasing, at least not at this particular value of the variable. This might be a local maximum, where the function’s got a larger value than it has anywhere else nearby. It might be a local minimum, where the function’s got a smaller value than it has anywhere else nearby. Or it might just be a little pause in the growth or shrinkage of the function. No telling, at least not just from knowing the derivative is zero.
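Here’s a minimal sketch of that last point, that a zero derivative alone doesn’t say which case you’re in. The three functions (`hill`, `valley`, `pause`) and the helper `slope` are names I made up for illustration, and `slope` only estimates the derivative numerically with a small step. All three have a derivative of zero at 0, but the slopes just to either side tell them apart.

```python
def hill(x):
    # Local maximum at x = 0.
    return -x ** 2

def valley(x):
    # Local minimum at x = 0.
    return x ** 2

def pause(x):
    # Neither: the function keeps rising, it just flattens out for a moment at 0.
    return x ** 3

def slope(f, x, h=1e-6):
    """Estimate the derivative of f at x with a central difference (an approximation)."""
    return (f(x + h) - f(x - h)) / (2 * h)

for f in (hill, valley, pause):
    # Print the estimated slope a little left of 0, at 0, and a little right of 0.
    print(f.__name__, slope(f, -0.5), slope(f, 0.0), slope(f, 0.5))
```

For the hill the slope goes from positive to negative, for the valley from negative to positive, and for the pause it’s positive on both sides.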

Derivatives tell you something about where the function is going. We can, and in practice often do, make approximations to a function that are built on derivatives. Many interesting functions are real pains to calculate. Derivatives let us make polynomials that are as good an approximation as we need and that a computer can do for us instead.
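To give a feel for that, here’s a sketch using the sine function. The particular polynomial is the Taylor polynomial about 0, which is my choice of example and only one way to build such an approximation. Its coefficients come from the derivatives of sine at 0, which cycle through 0, 1, 0, -1. The function name `sine_polynomial` is made up for this illustration.

```python
import math

def sine_polynomial(x, terms=6):
    """Sum the first `terms` nonzero terms of the Taylor polynomial of sine about 0."""
    total = 0.0
    for k in range(terms):
        n = 2 * k + 1  # only odd powers appear; the even derivatives of sine at 0 are zero
        total += (-1) ** k * x ** n / math.factorial(n)
    return total

x = 1.0
for terms in (1, 2, 3, 6):
    approx = sine_polynomial(x, terms)
    # Show how the error shrinks as we use more derivative information.
    print(terms, approx, abs(approx - math.sin(x)))
```

More terms means a better match, and each term is just multiplying and adding, which is the kind of work a computer is happy to do.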

Derivatives can change rapidly. That’s all right. They’re functions in their own right. Sometimes they change more rapidly and more extremely than the original function did. Sometimes they don’t. Depends what the original function was like.

Not every function has a derivative. Functions that have derivatives don’t necessarily have them at every point. A function has to be continuous to have a derivative. A function that jumps all over the place doesn’t really have a direction, not that differential calculus will let us suss out. But being continuous isn’t enough. The function also has to be … well, we call it “differentiable”. The word at least tells us what the property is good for, even if it doesn’t say how to tell if a function is differentiable. The function has to not have any corners, points where the direction suddenly and unpredictably changes.

Otherwise differentiable functions can have some corners, some non-differentiable points. For instance the height of a bouncing ball, over time, looks like a bunch of upside-down U-like shapes that suddenly rebound off the floor and go up again. Those points interrupting the upside-down U’s aren’t differentiable, even though the rest of the curve is.
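A rough way to see a corner numerically is to estimate the slope from the left and from the right and notice they disagree. This sketch uses the absolute value function as a stand-in for one bounce off the floor; that stand-in, and the helper name `one_sided_slopes`, are my assumptions for illustration and not a model of a real ball.

```python
def one_sided_slopes(f, x, h=1e-6):
    """Estimate the slope of f at x using only points to the left, then only points to the right."""
    left = (f(x) - f(x - h)) / h
    right = (f(x + h) - f(x)) / h
    return left, right

# At the corner (x = 0) the two estimates disagree, so there is no single derivative.
corner_left, corner_right = one_sided_slopes(abs, 0.0)
print(corner_left, corner_right)

# Away from the corner (x = 1) the two estimates agree, so the derivative exists there.
smooth_left, smooth_right = one_sided_slopes(abs, 1.0)
print(smooth_left, smooth_right)
```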

It’s possible to make functions that are nothing but corners and that aren’t differentiable anywhere. These are done mostly to win quarrels with 19th century mathematicians about the nature of the real numbers. We use them in Real Analysis to blow students’ minds, and occasionally after that to give a fanciful idea a hard test. In doing real-world physics we usually don’t have to think about them.

So why do we do them? Because they tell us how functions change. They can tell us where functions momentarily don’t change, and those spots are usually important. And then there are equations with derivatives in them, known in the trade as “differential equations”. They’re also known to mathematics majors as “diffy Q’s”, a name which delights everyone. Diffy Q’s let us describe physical systems where there’s any kind of feedback. If something interacts with its surroundings, that interaction’s probably described by differential equations.

So how do we do them? We start with a function that we’ll call ‘f’ because we’re not wasting more of our lives figuring out names for functions and we’re feeling smugly confident Turkish authorities aren’t interested in us.

f’s domain is real numbers, like the one we’ll call ‘x’. Its range is also real numbers. f(x) is some number in that range. It’s the one that the function f matches with the domain’s value of x. We read f(x) aloud as “the value of f evaluated at the number x”, if we’re being pretentious or trying to scare Freshman Calculus classes. Really we say “f of x” or maybe “f at x”.

There’s a couple ways to talk about the derivative of f. First, for example, we say “the derivative of f with respect to x”. By that we mean how does the value of f(x) change if there’s a small change in x. That “with respect to x” matters if we have a function that depends on more than one variable, if we had an “f(x, y)”. Having several variables for f changes stuff. Mostly it makes the work longer but not harder. We don’t need that here.

But there’s a couple ways to write this derivative. The best one if it’s a function of one variable is to put a little ‘ mark: f'(x). We pronounce that “f-prime of x”. That’s good enough if there’s only the one variable to worry about. We have other symbols. One we use a lot in doing stuff with differentials looks for all the world like a fraction. We spend a lot of time in differential calculus explaining why it isn’t a fraction, and then in integral calculus we do some shady stuff that treats it like it is. But that’s $\frac{df}{dx}$. This also appears as $\frac{d}{dx} f$. If you encounter this do not cross out the d’s from the numerator and denominator. In this context the d’s are not numbers. They’re actually operators, which is what we call a function whose domain is functions. They’re notational quirks is all. Accept them and move on.

How do we calculate it? In Freshman Calculus we introduce it with this expression involving limits and fractions and delta-symbols (the Greek letter that looks like a triangle). We do that to scare off students who aren’t taking this stuff seriously. Also we use that formal proper definition to prove some simple rules work. Then we use those simple rules. Here’s some of them.
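That expression amounts to this: take the change in f between x and a nearby point, divide by how far apart the points are, and see what that ratio approaches as the gap shrinks to zero. Here’s a small numerical sketch of the idea. I write the gap as `h` instead of a delta symbol, and `difference_quotient` and `square` are names invented for this example. A computer can’t take a true limit this way; it can only show the ratio settling down.

```python
def difference_quotient(f, x, h):
    """Change in f divided by change in x, for a gap of size h."""
    return (f(x + h) - f(x)) / h

def square(x):
    return x * x

# As h shrinks, the ratio closes in on the derivative of x squared at x = 3, which is 6.
for h in (1.0, 0.1, 0.01, 0.001):
    print(h, difference_quotient(square, 3.0, h))
```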

- The derivative of something that doesn’t change is 0.

- The derivative of $x^n$, where n is any constant real number (positive, negative, whole, a fraction, rational, irrational, whatever), is $n x^{n-1}$.

- If f and g are both functions and have derivatives, then the derivative of the function f plus g is the derivative of f plus the derivative of g. This probably has some proper name but it’s used so much it kind of turns invisible. I dunno. I’d call it something about linearity because “linearity” is what happens when adding stuff works like adding numbers does.

- If f and g are both functions and have derivatives, then the derivative of the function f times g is the derivative of f times the original g plus the original f times the derivative of g. This is called the Product Rule.

- If f and g are both functions and have derivatives we might do something called composing them. That is, for a given x we find the value of g(x), and then we put that value in to f. That we write as f(g(x)). For example, “the sine of the square of x”. Well, the derivative of that with respect to x is the derivative of f evaluated at g(x) times the derivative of g. That is, f'(g(x)) times g'(x). This is called the Chain Rule and believe it or not this turns up all the time.

- If f is a function and C is some constant number, then the derivative of C times f(x) is just C times the derivative of f(x), that is, C times f'(x). You don’t really need this as a separate rule. If you know the Product Rule and that first one about the derivatives of things that don’t change then this follows. But who wants to go through that many steps for a measly little result like this?

- Calculus textbooks say there’s this Quotient Rule for when you divide f by g but you know what? The one time you’re going to need it it’s easier to work out by the Product Rule and the Chain Rule and your life is too short for the Quotient Rule. Srsly.

- There’s some functions with special rules for the derivative. You can work them out from the Real And Proper Definition. Or you can work them out from infinitely-long polynomial approximations that somehow make sense in context. But what you really do is just remember the ones anyone actually uses. The derivative of $e^x$ is $e^x$ and we love it for that. That’s why e is that 2.71828(etc) number and not something else. The derivative of the natural log of x is 1/x. That’s what makes it natural. The derivative of the sine of x is the cosine of x. That’s if x is measured in radians, which it always is in calculus, because it makes that work. The derivative of the cosine of x is minus the sine of x. Again, radians. The derivative of the tangent of x is um the square of the secant of x? I dunno, look that sucker up. The derivative of the arctangent of x is $\frac{1}{1 + x^2}$ and you know what? The calculus book lists this in a table in the inside covers. Just use that. Arctangent. Sheesh. We just made up the “secant” and the “cosecant” to haze Freshman Calculus students anyway. We don’t get a lot of fun. Let us have this. Or we’ll make you hear of the inverse hyperbolic cosecant.
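If you’d like some reassurance that the rules above hold up, here’s a sketch that compares each rule’s answer against a numerical estimate of the slope. The sample point `x = 0.7`, the choice of sine and the exponential as f and g, and the helper `slope` are all arbitrary picks of mine for illustration. The last check does a quotient the way the list suggests, as f times g to the power -1, using the Product Rule and the Chain Rule.

```python
import math

def slope(f, x, h=1e-6):
    """Estimate the derivative of f at x with a central difference (an approximation)."""
    return (f(x + h) - f(x - h)) / (2 * h)

x = 0.7   # arbitrary sample point
n = 2.5   # arbitrary exponent for the power rule

checks = [
    # (rule name, numerical estimate, what the rule says)
    ("power", slope(lambda t: t ** n, x), n * x ** (n - 1)),
    ("product", slope(lambda t: math.sin(t) * math.exp(t), x),
     math.cos(x) * math.exp(x) + math.sin(x) * math.exp(x)),
    ("chain", slope(lambda t: math.sin(t ** 2), x),
     math.cos(x ** 2) * 2 * x),
    # Quotient sin(x) / e^x treated as sin(x) * (e^x)^(-1):
    # product rule, with the chain rule applied to the (e^x)^(-1) factor.
    ("quotient", slope(lambda t: math.sin(t) / math.exp(t), x),
     math.cos(x) * math.exp(x) ** -1 + math.sin(x) * (-1) * math.exp(x) ** -2 * math.exp(x)),
    ("arctangent", slope(math.atan, x), 1 / (1 + x ** 2)),
]

for name, numeric, by_rule in checks:
    print(name, numeric, by_rule)
```

The two numbers in each row should agree to several decimal places. They won’t match exactly, because the numerical estimate is only an estimate.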

So. What’s this all mean for central force problems? Well, here’s what the effective potential energy V(r) usually looks like:

$$V_{\text{eff}}(r) = C r^n + \frac{L^2}{2 m r^2}$$

So, first thing. The variable here is ‘r’. The variable name doesn’t matter. It’s just whatever name is convenient and reminds us somehow why we’re talking about this number. We adapt our rules about derivatives with respect to ‘x’ to this new name by striking out ‘x’ wherever it appeared and writing in ‘r’ instead.

Second thing. C is a constant. So is n. So is L and so is m. 2 is also a constant, which you never think about until a mathematician brings it up. That second term is a little complicated in form. But what it means is “a constant, which happens to be the number $\frac{L^2}{2m}$, multiplied by $r^{-2}$”.

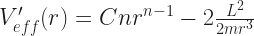

So the derivative of $V_{\text{eff}}$, with respect to r, is the derivative of the first term plus the derivative of the second. The derivative of each of those terms is going to be some constant times r to another number. And that’s going to be:

$$V_{\text{eff}}'(r) = C n r^{n-1} - 2 \frac{L^2}{2m} r^{-3}$$

And there you can divide the 2 in the numerator and the 2 in the denominator. So we could make this look a little simpler yet, but don’t worry about that.
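As a sanity check, here’s a sketch that compares the derivative from the rules against a numerical estimate of the slope. It assumes the effective potential has the form C times r to the n, plus L squared over 2 m r squared, which is my reading of the description above. The function names and the sample values of C, n, L, m, and r are made up for illustration and carry no physical units.

```python
def v_eff(r, C, n, L, m):
    """Effective potential energy, assumed form: C r^n + L^2 / (2 m r^2)."""
    return C * r ** n + L ** 2 / (2 * m * r ** 2)

def v_eff_prime(r, C, n, L, m):
    """Derivative by the power rule, with the 2 over 2 already divided out."""
    return C * n * r ** (n - 1) - L ** 2 / (m * r ** 3)

def slope(f, x, h=1e-6):
    """Estimate the derivative of f at x with a central difference (an approximation)."""
    return (f(x + h) - f(x - h)) / (2 * h)

# Hypothetical sample values, chosen only so the arithmetic is easy to follow.
C, n, L, m = -1.0, -1.0, 1.0, 1.0
r = 2.0

numeric = slope(lambda s: v_eff(s, C, n, L, m), r)
by_rule = v_eff_prime(r, C, n, L, m)
print(numeric, by_rule)
```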

OK. So that’s what you need to know about differential calculus to understand orbital mechanics. Not everything. Just what we need for the next bit.